Yoti blog

Stories and insights from the world of digital identity

Explore cards - What are Yoti cards and how do we use them in the Digital ID app?

Making Yoti relevant and useful whilst preserving privacy: We want to make sure that the Yoti app is useful to our whole community. We built Explore, a place to discover where you can use your digital ID. We also have some special offers for community members. This will look exactly the same in the Post Office EasyID app. Country-specific content: In your digital ID app, you will only see content that is relevant to your country. This means that we only show you partners that you can use your digital ID with or offers

How digital identity could help make cross-border trading easier

As we go about our social purpose work, we regularly get to speak to local, national and international non-profit organisations. Over the years, we’ve found that many struggle to understand the ways digital identity solutions might help them in their work. As part of our wider efforts to help the sector make sense of the technology, today we’re publishing the second of six articles looking at the use of digital identities in six different humanitarian settings. Please note that, while the technology use case is real, the scenarios are hypothetical in nature, and the projects do not exist

Making it easier for EU residents to prove their COVID-19 Vaccination status

Vaccine certificates are proving fundamental in safely opening the world up, and being able to prove your COVID status via the Yoti app for travel, leisure and work has been the most requested feature by the Yoti community in recent months. That’s why we’re excited to announce that it is now possible for fully vaccinated people to add their EU COVID-19 Vaccine Certificate to their Yoti app. Add your vaccine certificate to the Yoti app in seconds: Before you add your vaccine credentials to your Yoti app, you’ll need to make sure you’ve also added

How digital identity could be used to monitor food and cash rations

As we go about our social purpose work, we regularly get to speak to local, national and international non-profit organisations. Over the years, we’ve found that many struggle to understand the ways digital identity solutions might help them in their work. As part of our wider efforts to help the sector make sense of the technology, today we’re publishing the first of six articles looking at the use of digital identities in six different humanitarian and environmental settings. Please note that, while the technology use case is real, the scenarios are hypothetical in nature, and the projects do not

Tackling child sexual abuse online with the Safety Tech Challenge Fund

We’re delighted to announce that the Safety Tech Challenge Fund 2021 has awarded Yoti and our collaborators not one, but two rounds of funding. Working in partnership with Galaxkey & Image Analyzer and DragonflAI, we are looking to tackle child sexual abuse and demonstrate how end-to-end encryption and AI-powered image analysis could be used to create secure solutions that protect young people online. Between 2019 and 2020, over 10,000 cases of online child sexual abuse were reported to police forces across the UK. In efforts to create change to prevent such crimes, the UK

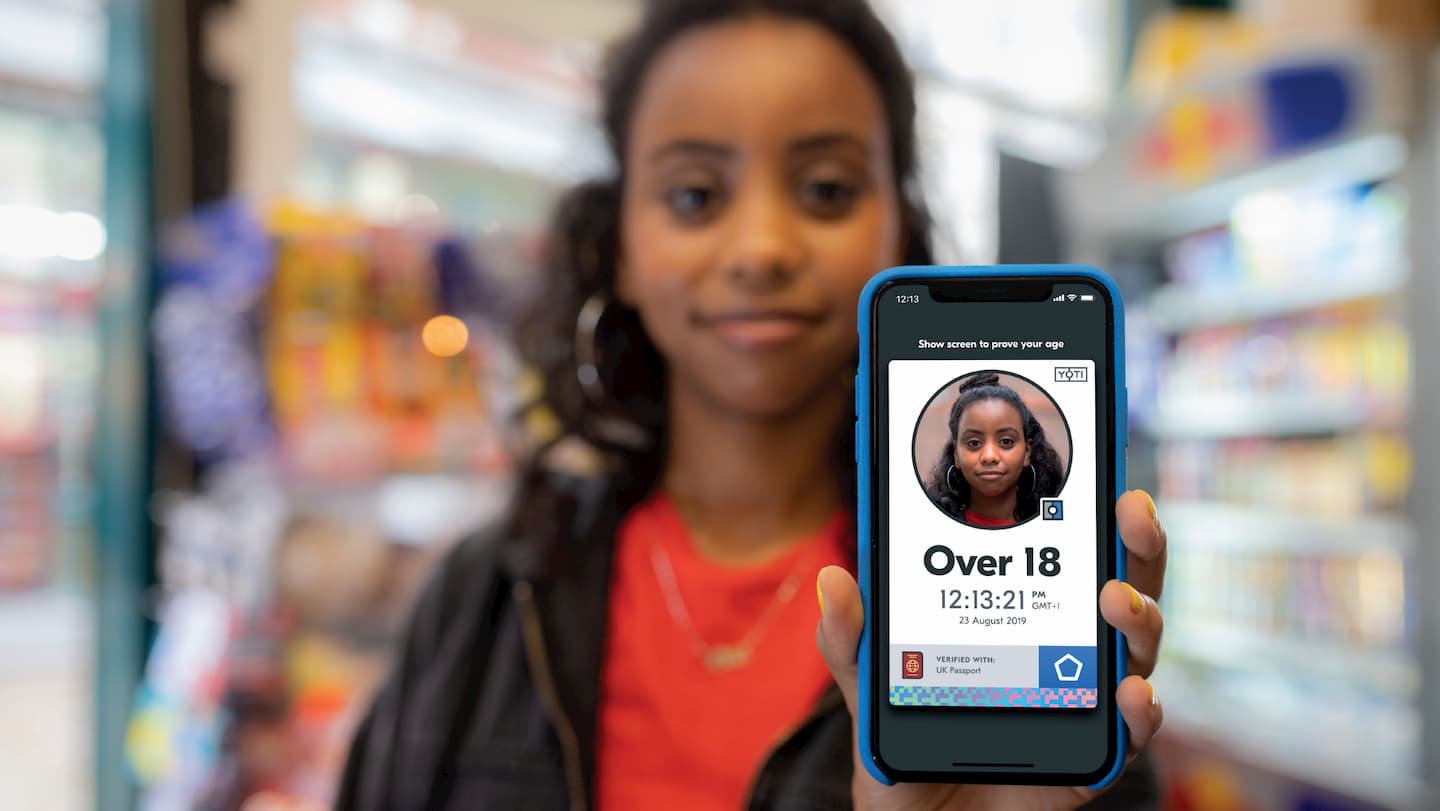

Our age assurance solutions are approved by German regulators KJM and FSM to protect young people online

We’re excited to announce that the Commission for the Protection of Minors in the Media (KJM) has approved our facial age estimation tool (formerly known as Yoti Age Scan) for use in the German market to protect young people online. You can read the KJM press release here. This follows our approval from the German Association for Voluntary Self-Regulation of Digital Media service providers (FSM) in 2020, which allowed German people to use digital age estimation and age verification technology for the first time to access digital adult content. You can read the FSM seal text here.
