Age assurance

Why facial age estimation, the most accurate age checking tool, shouldn’t be left on the sidelines

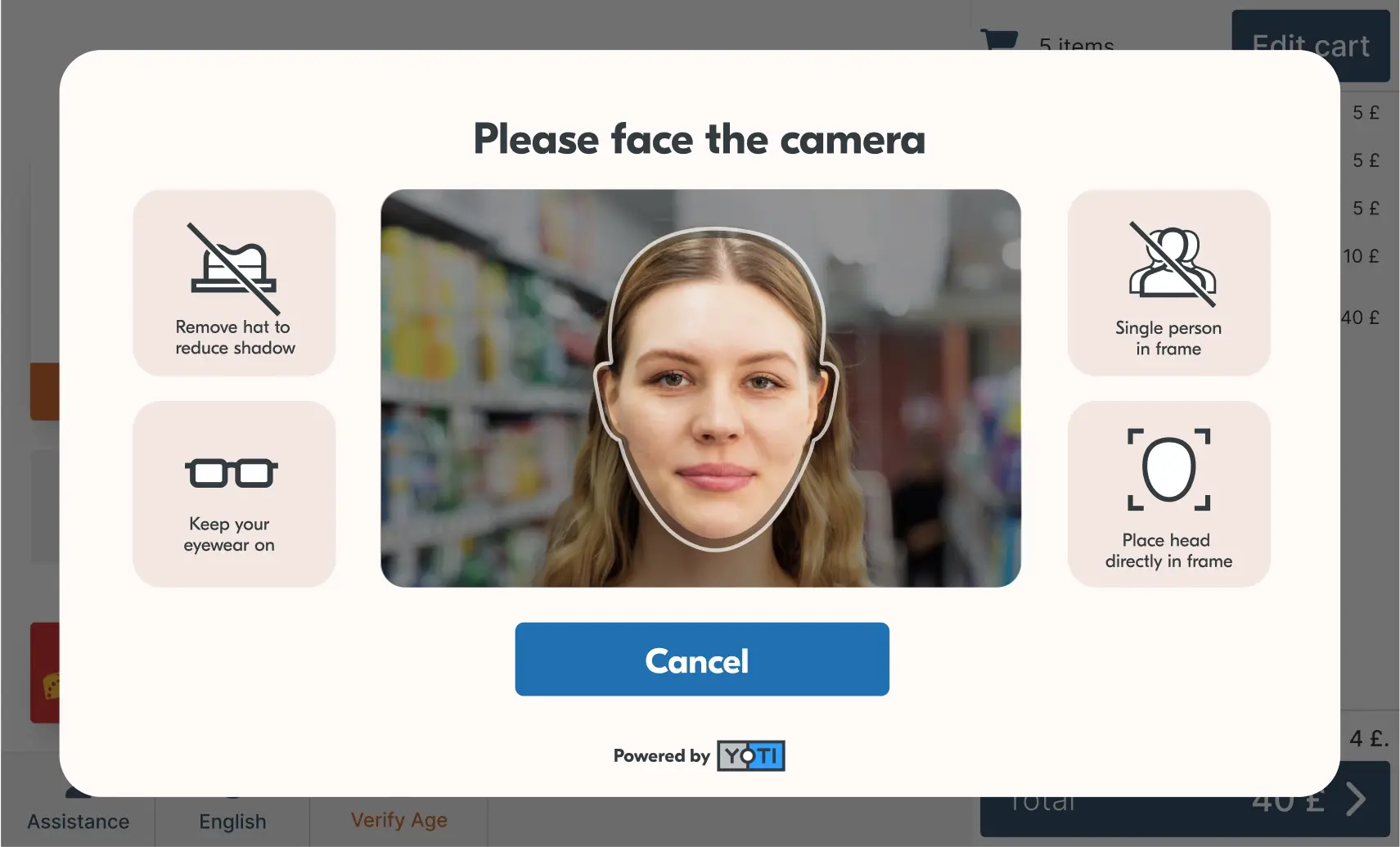

Many of us have been there: standing at a self-checkout, scanning our shopping, only to hit a roadblock when the till flags an age-restricted item like a bottle of wine or a pack of beer. Age verification accounts for 40–50% of interventions at self-checkouts, so it significantly disrupts and slows down the checkout experience. We wait for a retail worker to approve the sale. The retail worker does a visual estimation of our age – they look at our face and guess whether we’re old enough to buy the item. Most retailers follow the Challenge 25

Why testing data is as important as training data for machine learning models

When developing machine learning systems for facial age estimation, the conversation often centres on the training data: how much you have, how diverse it is, how inclusive it is, how well it represents your end users and, not least, where the data comes from. Intuitively, that focus makes sense. More data presumably leads to better models. But test data is just as important and, in some ways, even more critical for ensuring models perform effectively.

Training data: more isn’t always better

Common sense would suggest that for a machine learning model “the more data, the better.” And that’s

Texas App Store Accountability Act: what it means for age assurance worldwide

The State of Texas has passed a landmark law – the App Store Accountability Act – that places legal responsibility for age checking squarely on app store operators. Utah was the first state to enact this type of legislation, now followed by Texas. This new regulatory shift has far-reaching implications for digital safety, privacy and innovation around the world. As an age assurance provider, we believe it’s critical to explain the significance of this development, highlight the practical challenges it raises, and offer a path forward that protects both users and platforms. One of the main weaknesses of this

Yoti responds to the Draft Statement of Strategic Priorities for online safety

Last week, the Department for Science, Innovation and Technology published the final draft Strategic Priorities for online safety. We welcome the statement, which highlights the five areas the government believes should be prioritised for creating a safer online environment. These areas are: safety by design, transparency and accountability, agile regulation, inclusivity and resilience, and technology and innovation. These priorities will guide Ofcom as it enforces the Online Safety Act, ensuring platforms stay accountable and users are protected. We welcome this clear direction and commitment from the government to create safer online spaces. It’s positive that age assurance has been

Building trust through age assurance

Governments around the world are increasingly prioritising online safety and age regulations, with new laws emerging across multiple countries. Using proprietary data, our latest report explores:

- The growing demand for privacy-preserving age assurance
- How businesses are adapting to meet regulatory requirements
- Key trends in age assurance
- How Yoti’s solutions are protecting young people, safeguarding privacy, and helping businesses implement robust, trusted and effective age checks

Complying with Ofcom’s Protection of Children Codes: what you need to know about age assurance

With children’s online engagement at an all-time high, the UK government passed the Online Safety Act in 2023, aiming to make the UK ‘the safest place in the world to be online’. It places legal obligations on online services to prioritise user safety, particularly for children. As the UK’s communications regulator, Ofcom plays a pivotal role in enforcing the Act’s provisions. As part of phase two of the Act’s implementation, Ofcom published its Protection of Children Codes of Practice on 24th April 2025. Made up of over 40 practical measures, they outline how digital platforms must safeguard younger users