At Yoti, we perform millions of checks every week for our clients. A critical element of a robust check (whether that’s for age assurance, identity verification, digital IDs or authentication) is liveness detection, also known as Presentation Attack Detection (PAD).

The purpose of liveness detection is simple but essential. It makes sure that the person being verified is physically present in front of the device camera in real time. Without liveness, checks are vulnerable to basic fraud attacks (such as using printed photos or screen replays) and more sophisticated AI attacks (like AI clones). For organisations relying on digital verification, strong liveness is the difference between genuine trust and a false sense of security.

Since we perform so many checks at a global scale, across a diverse set of industries and regions, we are able to identify emerging trends and early threat vectors. This enables us to continuously develop and refine our technology. Our clients and regulators expect our services to be effective, and to perform consistently and accurately across skin tone, gender and age.

Bias and fairness

One of our principles is that Yoti should work for all. We strive to minimise skin tone, age and gender bias across all our products, while also ensuring accessibility.

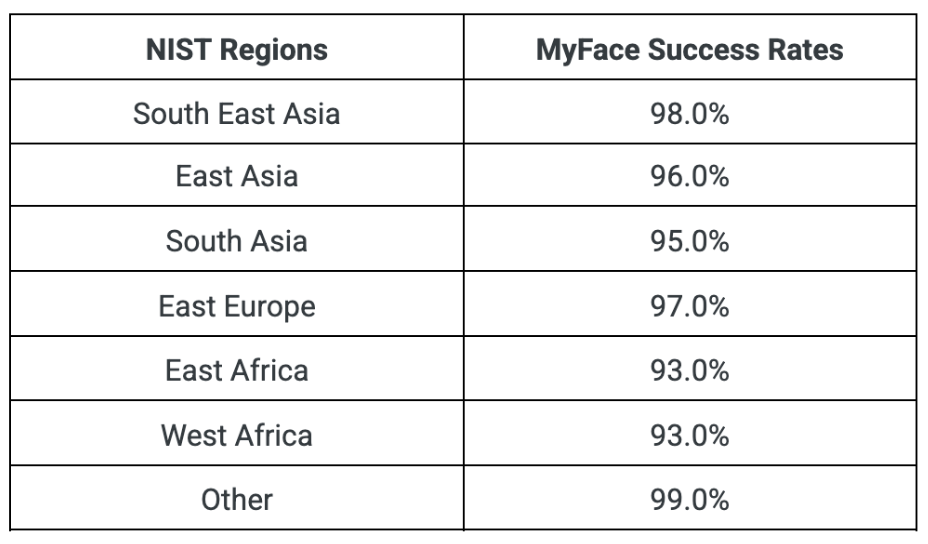

For facial age estimation assessments, the US National Institute of Standards and Technology (NIST) uses country-specific regions as a useful (though imperfect) proxy for evaluating fairness across ethnicities. We use the same regional groupings as an indicator of bias in our MyFace liveness system.

The table below shows MyFace liveness success rates by NIST region on mobile devices.

Performance remains consistently high across regions. Slightly lower performance in East and West Africa most likely reflects two factors:

- Whilst smartphone adoption is rising rapidly in East and West Africa, a higher proportion of devices have lower-quality cameras and slower, less reliable connectivity. This can affect image capture quality and end-to-end check times, both of which can reduce completion rates.

- As we have openly stated in our white paper, our training datasets have historically contained less data from African nations. We are actively addressing this to improve performance.

Yoti MyFace success rates over time

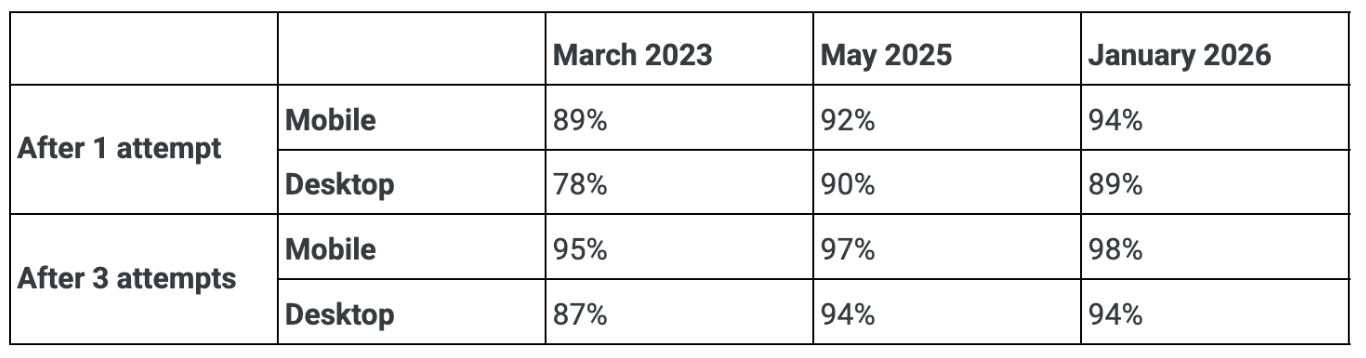

The vast majority (97%) of MyFace liveness transactions are completed on mobile phones, with the other 3% completed on desktops. Typically, users are allowed up to 3 attempts to complete a liveness check. This flexibility helps maximise completion rates for genuine users, particularly where lighting, positioning or familiarity with the process may affect the first attempt.

The table below shows how success rates have improved over time.

These improvements likely reflect three combined factors:

- Ongoing enhancements to the MyFace model

- Improvements in device camera quality over time

- Increased user familiarity with completing liveness checks

For clients, this translates into higher genuine-user completion rates without lowering security thresholds. In other words, stronger fraud protection does not need to mean greater friction.

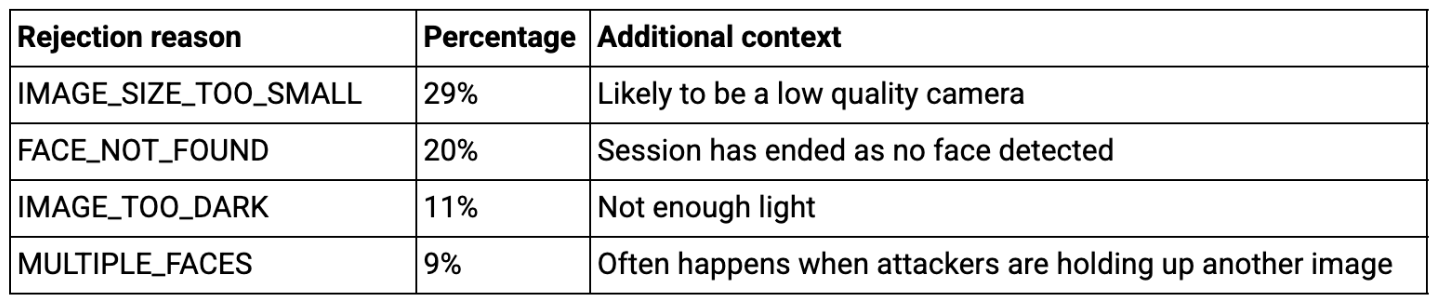

Why would someone be rejected for a liveness check?

The reasons users were rejected during presentation attack detection in 2025 reflect an interesting balance between technology and user issues. We cannot list every reason here, as that would reveal too much proprietary information. As an illustration, the principal reasons for rejection among the 2% of mobile users who were rejected in 2025 are outlined below:

In practical terms:

- Image too small – The captured image is too small or low in quality for reliable analysis. This may be due to device limitations or to a photo being presented to the camera.

- Face not found – No detectable face is present in the image. This can occur if a user presents a photo or screen image.

- Image too dark – Insufficient lighting prevents the analysis from confidently confirming that there is no presentation attack.

- Multiple faces – This tends to happen when an attacker holds up another image or device in view of the camera.

Yoti can deploy its ‘face capture module’ on the client side, ensuring that only images meeting quality thresholds are captured. No unnecessary data is shared. The single image is analysed and then deleted, with only the result returned to the client. When the module is used client side, the first two rejection reasons are effectively eliminated.

For organisations, this improves genuine-user completion rates while maintaining strong fraud controls. It also supports privacy-by-design principles.

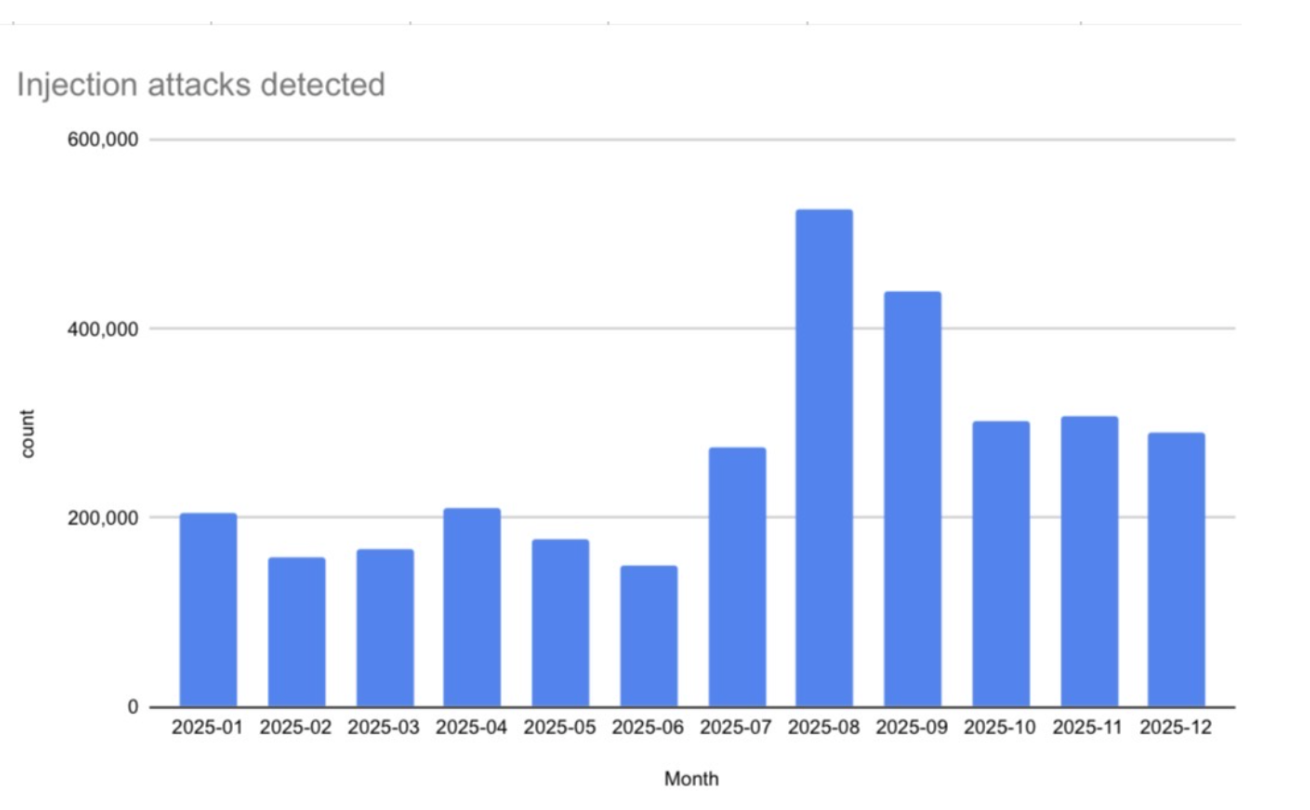

The rise of AI or deepfake injection attacks

In 2025, we saw an increase in the number of deepfake or injection attacks during age and identity verification checks, detecting a total of 3.2 million injection attacks. An injection attack involves feeding synthetic or pre-recorded imagery directly into the verification stream, bypassing a live camera feed.

In absolute terms, this represents a significant rise in the total number of attacks we have detected as our services have grown over the year. We now perform over 8 million checks per week across all our services. With the introduction of various regulations globally, including the UK’s Online Safety Act, organisations have implemented more robust age or identity checks for their users. This has increased both adoption and attempted fraud.

Injection attacks peaked at 527,013 in August 2025, driven by the introduction of age checks under the UK’s Online Safety Act.

Improving AI model outputs and the falling cost of generative AI tools mean that more individuals can quickly create convincing deepfakes or images of real people. Message boards, online forums and chat rooms openly share tips and tricks on spoofing checks and identifying weaker vendors. The barrier to entry is lower than ever.

Interestingly, after the August peak (which followed the introduction of age checks under the UK’s Online Safety Act), we observed a decline. Our assessment is that many individuals attempted to bypass Yoti MyFace liveness checks during the initial regulatory rollout and, when unsuccessful, shifted their focus elsewhere. We see very few individuals online claiming to have beaten Yoti MyFace liveness detection.

Independent validation

Yoti’s MyFace® is currently the only liveness model in the world to pass iBeta Level 3 evaluation under ISO/IEC 30107-3 – the highest performance level for presentation attack detection.

Independent testing matters. In a regulatory environment where fairness and security are under scrutiny, objective validation provides assurance that systems perform as claimed. As AI capabilities continue to evolve, high-quality liveness checks are foundational to digital trust.

If you’d like to know more, get in touch or head over to our dedicated page.

– Matt, B2B Marketing Director