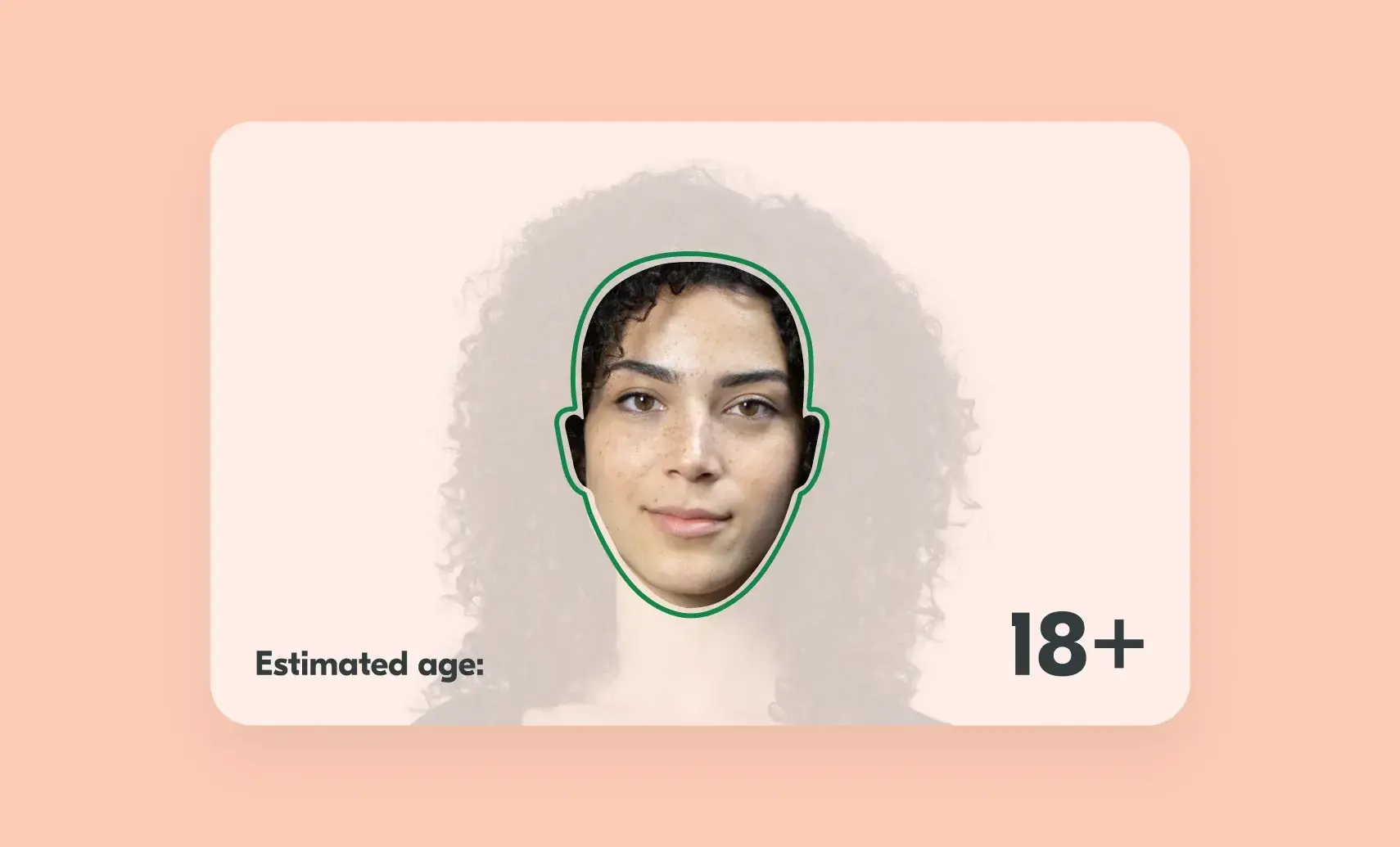

Facial age estimation using machine learning has advanced significantly in recent years. But a common and fair question still arises: how accurate can it really be? Can a system look at your face and accurately guess your age, especially when humans often get it wrong?

The short answer is that it’s very accurate – but not perfect. We explain why.

The myth of 100% accuracy

It’s important to set realistic expectations. No facial age estimation model can achieve 100% accuracy across all ages.

Human aging is highly individual and shaped by many external factors, especially as we get older. Lifestyle variables include:

- Smoking

- Alcohol consumption

- Sun exposure

- Stress levels

These all begin to play a much greater role in how our faces age. Even humans struggle to judge age accurately in these cases. But a machine learning model with well-understood, independently tested accuracy can complete a wide range of age checks without needing to collect personal information or check a government-issued identity document.

At Yoti, we operate on a policy of continuous improvement. We are always working to improve our model's accuracy with new data. Generally speaking, though, as our model matures, the gains become smaller and more incremental.

The surprising accuracy of teen estimation

Interestingly, the most accurate age bracket for our facial age estimation is between 13 and 18 years old. This may seem counterintuitive. After all, teenagers seemingly grow and mature at wildly different rates. But according to evaluations from the National Institute of Standards and Technology (NIST), our model performs exceptionally well in this range.

Why is this the case? It’s most likely because adolescence, while involving rapid development, also presents more consistent facial cues across individuals than adulthood. Our lived experience might suggest that a teenager's age is difficult to judge, but the data shows otherwise.

Yoti’s accuracy decreases with age, and that’s by design

As users age beyond their mid-20s, facial cues become less reliable indicators of age. A 40-year-old with minimal sun exposure may look younger than a 30-year-old who has lived in harsh outdoor conditions.

Most practical use cases, especially for accessing adult content, e-commerce and social media, don’t require an exact age estimation. Instead, they tend to focus on a simple binary decision: is this person under or over 18? Some have other requirements, such as: is this person aged over 13 or under 18? Rarely is there a need for age estimation technology to distinguish between a 49-year-old and a 58-year-old.

This is why we intentionally optimise our model to perform best within the relevant regulatory age bands and not across the entire human lifespan.

Why a 100% accurate model is not the goal

Theoretically, creating a model that achieves exact facial age estimation results across all demographics would require:

- An enormous and continuously updated dataset, especially as studies show diet and nutrition, lifestyle and healthcare improve over generations

- Rich representation across different ethnicities, skin tones, lighting conditions and image quality types

- Sophisticated controls for bias, fairness and privacy

But such a model would be:

- Impractical to build and train

- Prohibitively expensive to deploy at scale for most applications

- Unnecessary for nearly every real-world application

Instead, our approach prioritises relevance, fairness, scalability and efficiency.

Balancing accuracy, fairness, and transparency

Our model is trained and tested on data that has an emphasis on:

- Performance in the 13-25 age group

- Demographic diversity across skin tone and gender

- Resistance against spoofing attempts and robustness in real-world use conditions

We publish our model’s performance results openly, including Mean Absolute Error (MAE) across skin tone, gender and age. No method of age assurance is perfect, but our facial age estimation is constantly improving, as our published accuracy rates show.
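
To make the MAE figure concrete: it is the average of the absolute differences between estimated and true ages, and it can be reported separately for each demographic or age group. The sketch below is illustrative only. The function name, the record layout and the toy values are assumptions made for this example, not Yoti's evaluation code or published results.

```python
from collections import defaultdict

def mae_by_group(records):
    """Return {group: mean absolute error} for (group, true_age, estimated_age) records."""
    totals = defaultdict(lambda: [0.0, 0])  # group -> [sum of absolute errors, count]
    for group, true_age, estimated_age in records:
        totals[group][0] += abs(estimated_age - true_age)
        totals[group][1] += 1
    return {group: error_sum / count for group, (error_sum, count) in totals.items()}

# Made-up toy values for illustration only; these are not real measurements.
toy_records = [
    ("13-17", 14, 15.0),
    ("13-17", 16, 15.0),
    ("18-24", 20, 22.0),
    ("18-24", 23, 22.0),
]

print(mae_by_group(toy_records))  # {'13-17': 1.0, '18-24': 1.5}
```

Reporting the error per group, rather than as one overall number, is what makes it possible to see whether a model performs consistently across skin tone, gender and age.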

Buffers, other age assurance methods and the “waterfall” approach

Yoti facial age estimation strikes a balance between effective age checks and user privacy. With our anti-spoofing measures, facial age estimation is a highly effective way to prevent children from accessing age-restricted content, goods and services. However, we recommend organisations implement safeguards.

- Age buffers – for example, setting a threshold of 23+ when the legal age is 18. This mirrors the Challenge 25 approach already used for retail age checks in the UK, or the equivalent in the US, which uses age 30.

For a user aged 19 in an 18+ use case, for example, we are able to offer organisations additional methods of age verification.

- The “waterfall” approach – used when facial age estimation, usually with buffers, is the first check, followed by a secondary verification option using any of our 10+ other age checking methods. So if someone doesn’t pass the facial age estimation check, perhaps because they have been estimated below the threshold, they will then be asked to prove their age another way, for example with our Digital ID app.
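
The buffer and waterfall logic described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Yoti's API: the names `AgeCheckResult`, `waterfall_age_check` and `fallback` are hypothetical, and the fallback is modelled as a simple callable that returns `True` when a second method (such as a Digital ID or an ID document) confirms the user meets the legal age.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class AgeCheckResult:
    passed: bool
    method: str  # which step of the waterfall decided the outcome

def waterfall_age_check(
    estimated_age: float,
    liveness_ok: bool,
    legal_age: int = 18,
    buffer_years: int = 5,  # assumption: 18 + 5 gives the 23+ threshold from the example
    fallback: Optional[Callable[[], bool]] = None,
) -> AgeCheckResult:
    """First try facial age estimation with a buffer, then fall back to another method."""
    threshold = legal_age + buffer_years
    # Step 1: estimation passes only if liveness succeeded and the estimate clears the buffer.
    if liveness_ok and estimated_age >= threshold:
        return AgeCheckResult(True, "facial_age_estimation")
    # Step 2: otherwise ask the user to prove their age another way, if one is offered.
    if fallback is not None and fallback():
        return AgeCheckResult(True, "fallback_verification")
    return AgeCheckResult(False, "none")

# A 19-year-old estimated at 20.1 falls below the 23+ threshold, so the fallback decides.
print(waterfall_age_check(20.1, liveness_ok=True, fallback=lambda: True))
```

The buffer means that users close to the legal age are routed to a second method rather than being passed on the estimate alone, while users estimated well above the threshold complete the check in a single step.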

Yoti’s age check services include our patent-protected technology, which relates to a process of authorisation that combines age estimation (e.g. facial age estimation) with liveness. If that check fails, an age-related identity attribute is verified instead, for example through Digital ID or an ID document.

This layered strategy enhances inclusivity, reduces user friction, and maintains regulatory compliance – without over-reliance on any single age checking method.

Enabling safe and inclusive access

Across the globe, new regulations are emerging that require online age checks. Facial age estimation offers a privacy-preserving, scalable, and user-friendly way to meet these requirements – filling a gap where no safeguards previously existed.

These systems bring meaningful safeguards for children, and reduce dependence on formal identity document verification. At Yoti, our focus remains on delivering trusted, inclusive and ethical technology that supports a safer digital experience for all.