We are delighted to share the results of the latest evaluation of our newest facial age estimation model by the National Institute of Standards and Technology (NIST). The new model shows notable performance improvements across a number of metrics.

NIST’s evaluation is extremely thorough. It uses over 20 million images, and NIST continues to refine its testing methodology over time. This helps to highlight models that are robust across multiple datasets and scenarios.

Our strategy for developing our model is not “data dependent”. Machine learning models can benefit greatly from large quantities of data in the early stages, but once a model reaches maturity, adding more and more data yields sharply diminishing returns. We do not use data from our checks (for example, images submitted for facial age estimation), nor do we scrape the web for images.

We do continue to add consented training data, but that is not the primary source of the improvements we see over time. Instead, we have built our model to learn various characteristics of an image based on specific outcomes. This means we can scale our model more effectively, react to moving threat vectors, improve efficacy and work to reduce bias.

For example, this means we can train our model to avoid relying on misleading markers:

- A wrinkled forehead could be caused by a frown rather than by the ageing process.

- Glasses may be more prevalent in older age groups, but they are not indicative of age, so the model should take less notice of them.

- A beard generally indicates that a male is over about 18 years of age, but it could also be used in an easy presentation attack.

Balancing these differing signals is a challenge. Our strategy from the start has been to build a model that best meets the real-world requirements of businesses and regulators. This latest NIST evaluation neatly demonstrates this.

Key takeaways from our latest NIST evaluation

We have seen a number of improvements over our previously submitted model. The key takeaways are:

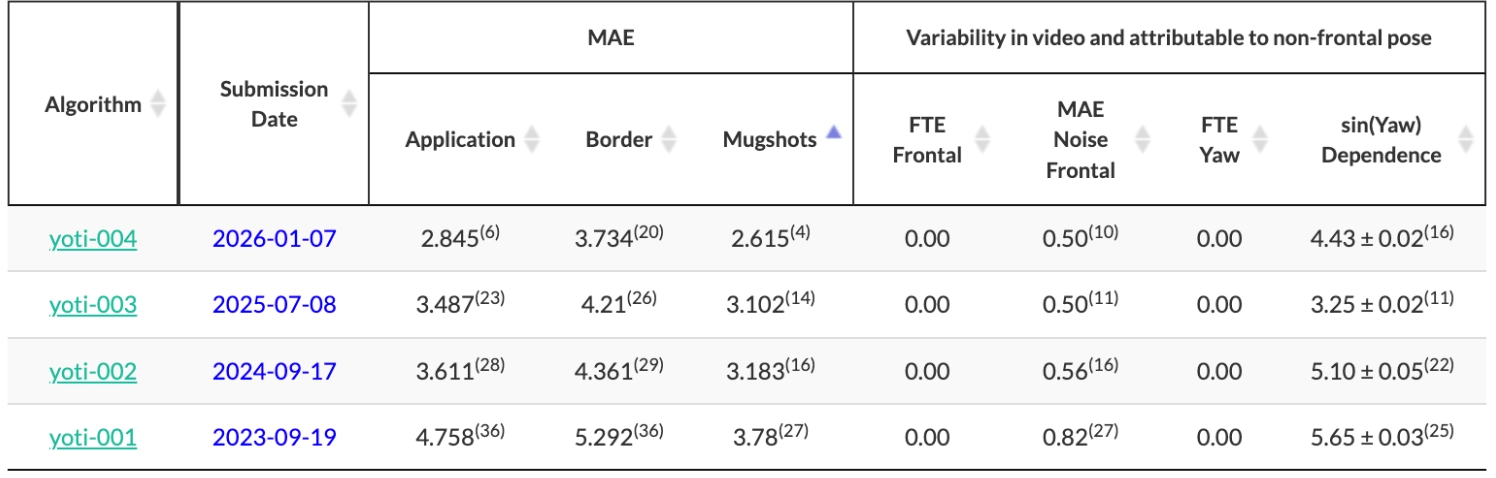

Mean Absolute Error (MAE) has improved from 3.102 to 2.615 on the NIST mugshot dataset.
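MAE is the average absolute difference, in years, between the estimated age and the true age across a set of images. The minimal sketch below computes it; the function name and the sample values are purely illustrative and are not NIST data.

```python
def mean_absolute_error(estimated_ages, true_ages):
    """Average absolute difference (in years) between estimated and true ages."""
    if not estimated_ages or len(estimated_ages) != len(true_ages):
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(abs(est - true) for est, true in zip(estimated_ages, true_ages))
    return total / len(true_ages)


# Hypothetical example values for illustration only, not NIST results.
estimated = [19.5, 24.0, 31.2, 17.8]
actual = [21, 22, 30, 18]
print(round(mean_absolute_error(estimated, actual), 3))
```

Because every error counts by its absolute size, an MAE gap of 0.2 years means estimates are, on average, about 0.2 × 12 = 2.4 months further from the true age.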

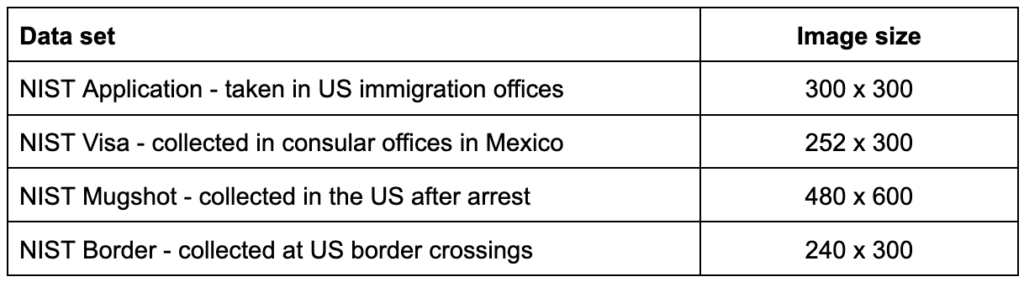

NIST uses multiple datasets, each with different characteristics. Principally, they vary in image quality.

This has a statistically significant effect on model performance, even though the mugshot dataset is only labelled by year of age (not by month, or even day, of birth).

- We consider the mugshot dataset the closest to our real-world use case because of its higher image quality. The mobile images we typically process are of even higher quality, at 720 x 800 pixels.

- This takes us from 12th to 3rd place among vendors for MAE.

- The gap to first place is now just 0.2 years of MAE, a very small margin that equates to roughly 2.4 months.

Yoti facial age estimation improvement over time

Yoti has submitted four models for NIST evaluation. The table below shows how our MAE has improved over time across all datasets; on the mugshot dataset specifically, it has fallen from 3.78 to 2.615. The table shows MAE for the 18-30 age group, stratified by age and gender.
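A table stratified by age and gender can be produced by grouping per-image errors before averaging them. The sketch below assumes a simple `(true_age, gender, estimated_age)` record layout and a hypothetical `stratified_mae` helper, both our own illustrative choices rather than NIST's tooling; the sample records are made up.

```python
from collections import defaultdict
from statistics import mean


def stratified_mae(records, min_age=18, max_age=30):
    """MAE per (true age, gender) stratum, for true ages in min_age..max_age.

    Each record is a (true_age, gender, estimated_age) tuple; this layout is
    a hypothetical choice for illustration.
    """
    errors = defaultdict(list)
    for true_age, gender, estimated_age in records:
        if min_age <= true_age <= max_age:
            errors[(true_age, gender)].append(abs(estimated_age - true_age))
    # Average the absolute errors within each stratum, ordered by age then gender.
    return {stratum: mean(errs) for stratum, errs in sorted(errors.items())}


# Hypothetical records for illustration only, not NIST data.
records = [
    (18, "F", 20.0), (18, "F", 17.0), (18, "M", 19.5),
    (25, "F", 24.0), (25, "M", 27.0), (45, "M", 40.0),
]
for (age, gender), mae in stratified_mae(records).items():
    print(f"age {age} {gender}: MAE {mae:.2f}")
```

The 45-year-old record is excluded because it falls outside the 18-30 range.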

How did we do this?

Not the easy way. The easy way to reduce MAE over an entire dataset would be to reduce the error for older demographics, where the average error is much higher. However, accuracy for older age groups is not critical with respect to current and impending legislation.
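This follows from how MAE aggregates: the overall figure is a weighted average of per-group MAEs. In the standard identity below (our own notation, not NIST's), a_i and â_i are the true and estimated ages for image i, N is the total number of images, and N_g is the number of images in age group g. If only group g changes, the overall MAE moves by that group's share of the change, so groups with a high error and a large share of the images offer the most room to lower the headline figure.

```latex
\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \lvert \hat{a}_i - a_i \rvert
             = \sum_{g} \frac{N_g}{N}\,\mathrm{MAE}_g ,
\qquad
\Delta\mathrm{MAE} = \frac{N_g}{N}\,\Delta\mathrm{MAE}_g
```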

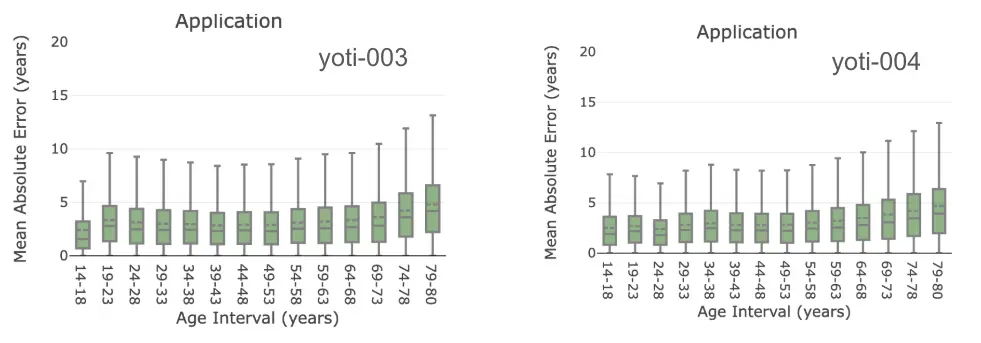

Our approach to improving our overall MAE was to reduce the error rate for the 18-30 age group without materially sacrificing accuracy for older and younger people.

The 18-30 age group is relevant to many new and existing legislative developments, and it is where our clients need accuracy to meet this legislation. As you can see below, results for the 39+ age group are very close between the two models, while the new model shows significant improvement at younger ages.

Bias across gender and skin tone

NIST uses geographical regions as a proxy for skin tone, whilst acknowledging that this is not a perfect solution.

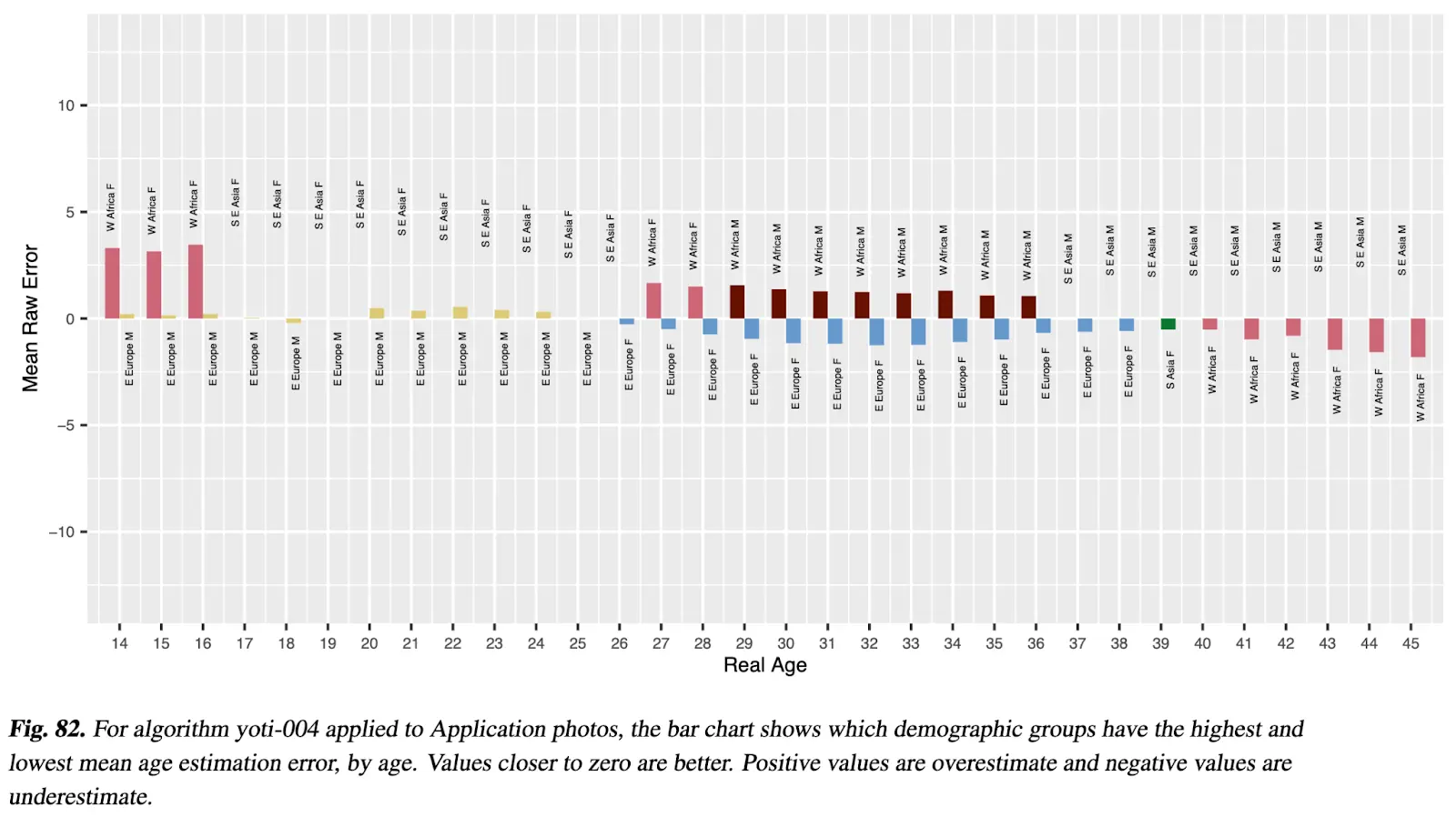

Below you can see Yoti’s performance across gender and region, showing the demographic groups with the highest and lowest MAE. Values closer to zero (smaller bars) across the whole chart are better.

As you can see, we underperform for females aged 14-16. This is why independent testing is critical: it shows us where we need to improve our model for specific demographics.
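One simple way to summarise a chart like this is to find the groups with the lowest and highest MAE and the gap between them. The sketch below does exactly that; the `mae_spread` helper, the group labels and the values are hypothetical, and this is not necessarily how NIST summarises demographic differences.

```python
def mae_spread(group_mae):
    """Return the best group, the worst group and the gap between their MAEs.

    group_mae maps a (gender, region) label to that group's MAE in years.
    """
    best = min(group_mae, key=group_mae.get)
    worst = max(group_mae, key=group_mae.get)
    return best, worst, group_mae[worst] - group_mae[best]


# Hypothetical per-group MAEs for illustration only, not NIST results.
group_mae = {
    ("female", "region A"): 2.9,
    ("female", "region B"): 2.4,
    ("male", "region A"): 2.1,
    ("male", "region B"): 2.6,
}
best, worst, gap = mae_spread(group_mae)
print(f"best: {best}, worst: {worst}, gap: {gap:.1f} years")
```

A smaller gap, together with low MAE values overall, indicates more even performance across demographics.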

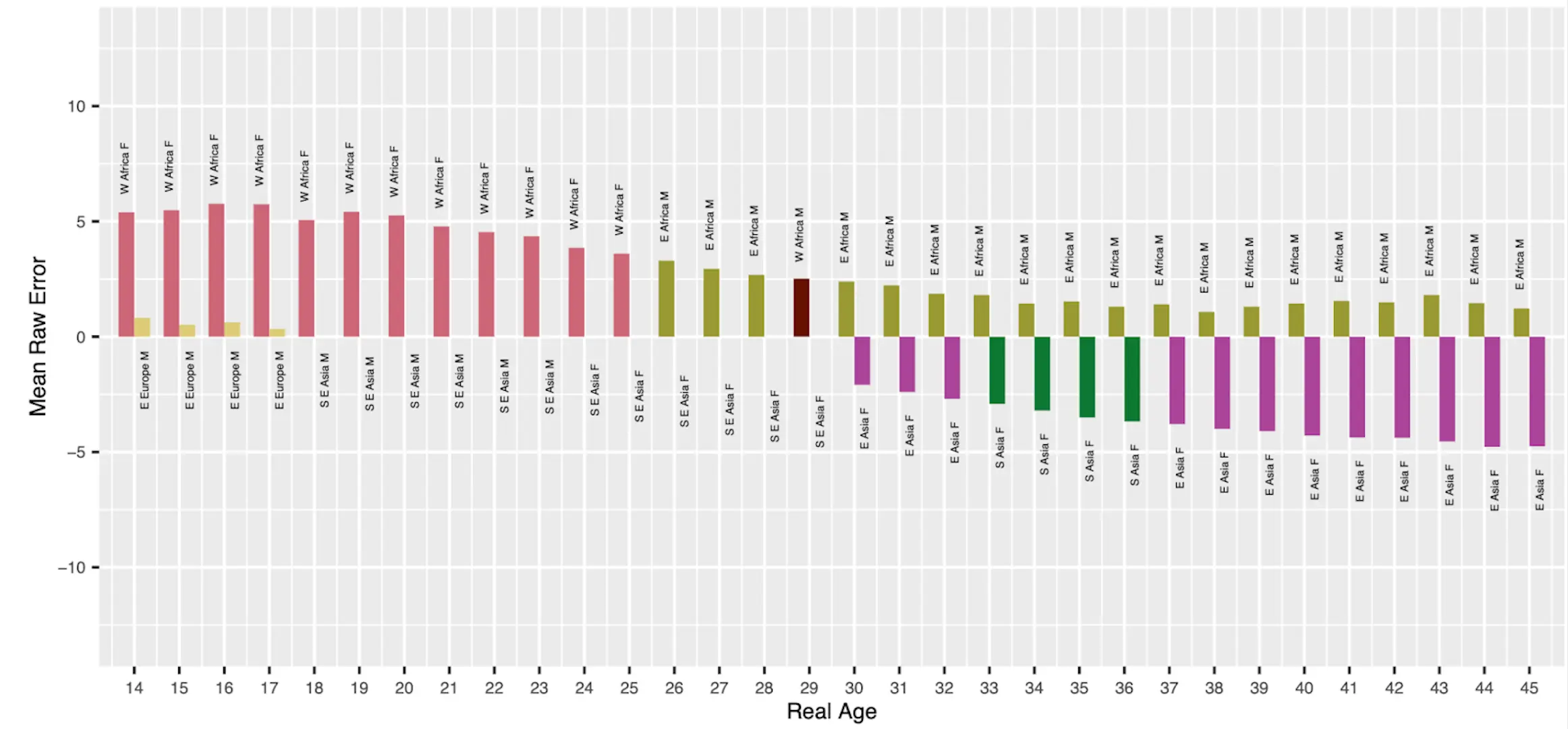

Here, for comparison, are the results for a model from another vendor, one of the other top-five models:

Minimising bias is not just one of our principles; it is something regulators and businesses demand. We have always publicly released our own accuracy testing across age, gender and skin tone. Our goal is to minimise bias for everyone. We recognise the demographics where we need to improve, and we focus resources on resolving those issues.

Most robust model

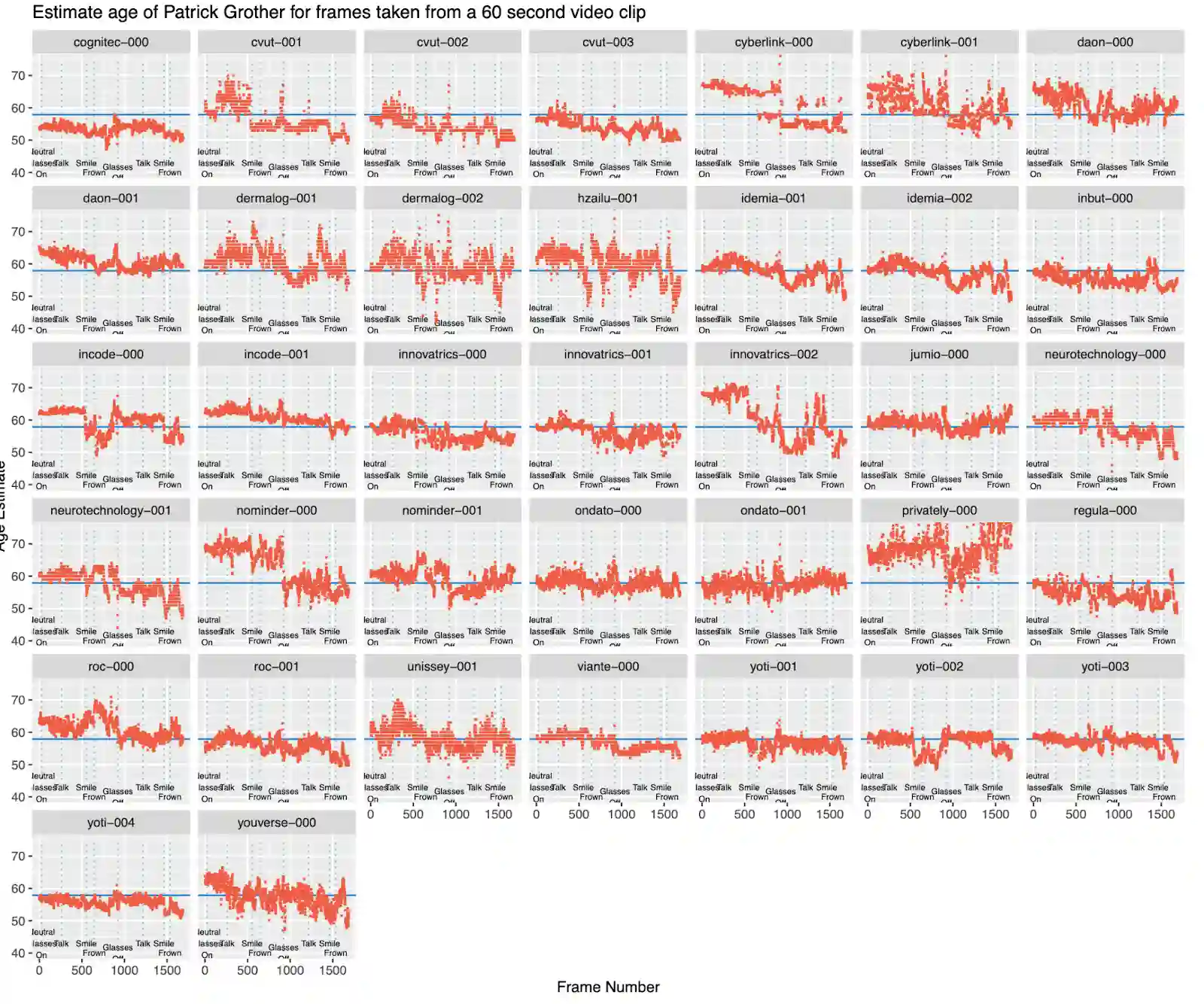

Our models are relatively robust (invariant) to changes in facial expression. NIST's test for this is still at an early, experimental stage, but it is an important and indicative one. It involves putting on and taking off glasses and changing facial expression: smiling, frowning, talking and holding a neutral expression. The test measures how much the age estimate changes across those scenarios.

The charts below show how the age estimate varies across a video of a person changing their facial expression, then putting on glasses and repeating the same sequence. The primary goal for a robust model is to stay close to the blue line (the individual's actual age). A secondary goal is to have as little variance as possible across the test.
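The two goals above can be expressed as two numbers per video: how far the estimates sit from the true age, and how much they fluctuate from frame to frame. The minimal sketch below assumes per-frame age estimates are available; the `robustness_summary` helper and the sample values are hypothetical, and NIST's own measure may differ.

```python
from statistics import mean, pstdev


def robustness_summary(frame_estimates, true_age):
    """Summarise per-frame age estimates from one video of one person.

    Returns (mean absolute deviation from the true age, population standard
    deviation of the estimates). Lower is better for both.
    """
    deviation = mean(abs(est - true_age) for est in frame_estimates)
    spread = pstdev(frame_estimates)
    return deviation, spread


# Hypothetical per-frame estimates: neutral, smiling, frowning, talking,
# then the same sequence wearing glasses. Not NIST data.
estimates = [34.0, 33.0, 36.0, 34.5, 35.0, 34.0, 37.0, 35.5]
dev, spread = robustness_summary(estimates, true_age=34)
print(f"mean deviation: {dev:.3f} years, spread: {spread:.3f} years")
```

The first number tracks closeness to the blue line; the second tracks how noisy the estimate is across expressions and glasses.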

Here, Yoti comes top, with the lowest noise. Yoti’s latest model (yoti-004) is shown bottom left.

Summary

Balancing model performance across all of these metrics is a challenge, but it is important for online safety, for businesses meeting their obligations, and for regulators who need to be satisfied that high assurance age checks are being performed well and in a balanced way.

At Yoti, one of our principles is to build technology that works for everyone and performs fairly. That led us, from the start, to build a model that does not rely solely on ever-increasing amounts of data to improve. All of the NIST results discussed here are publicly available on the NIST website.

By combining our facial age estimation with our world-leading liveness and SICAP, businesses can have confidence that they are using a world-class age check solution.

To learn more about facial age estimation, you can read our white paper or get in touch.

– Erlend, Head of Research & Development