Deepfakes are a hot topic right now. Taylor Swift recently became the victim of deepfake scams: first, an AI-generated video of her promoted a fake cookware competition, and then explicit AI-generated images of her went viral online. An AI-cloned voice impersonating President Joe Biden tried to discourage people from voting. And celebrities including Piers Morgan, Nigella Lawson and Oprah Winfrey found deepfake adverts online that showed them endorsing an influencer’s controversial self-help course.

But it’s not just celebrities and public figures who are at risk of deepfake scams. Fraudsters are also using deepfake technology to scam ordinary people.

Are you dating a deepfake?

Fraudsters are increasingly using deepfakes – digitally manipulated images or videos that can look convincingly real – to trick innocent people on dating sites. Whilst genuine daters are looking for love, fraudsters are using digitally altered photos and fake videos to create realistic personas. They then use these false identities to have live video conversations with daters. It’s scarily realistic.

Genuine daters believe they are talking to a real person. In reality, they are having a video conversation with a fraudster hiding behind the fake persona. In one instance, a dater thought she was in a two-year relationship and was tricked into parting with £350,000.

It’s not just the financial loss; the emotional distress these scams cause can also be devastating.

Beware of AI voice scams

This isn’t only happening on dating sites, though. Any platform or app that allows people to communicate with one another is at risk, including online marketplaces, social media and even regular video and phone calls.

The victim thinks they are having a phone call with their friend, when it’s actually a scammer hiding behind an AI-generated voice or video. The fraudster uses AI to pose as the friend and might then claim to be in trouble, saying they need some money. You get the idea…

And this is happening more often than you might think. In a recent study, 77% of people who had fallen victim to an AI voice scam lost money as a result. In one instance, an employee was conned into paying £20 million of her company’s money to fraudsters after a deepfake video conference call.

It’s become such a problem that the Federal Communications Commission (FCC) has now made all robocalls using AI-generated voices illegal. This gives state attorneys general the ability to take action against scammers using AI voice cloning technology in their calls.

Protect yourself from deepfakes with a Digital ID

One way to protect yourself from deepfake scams is with a Digital ID.

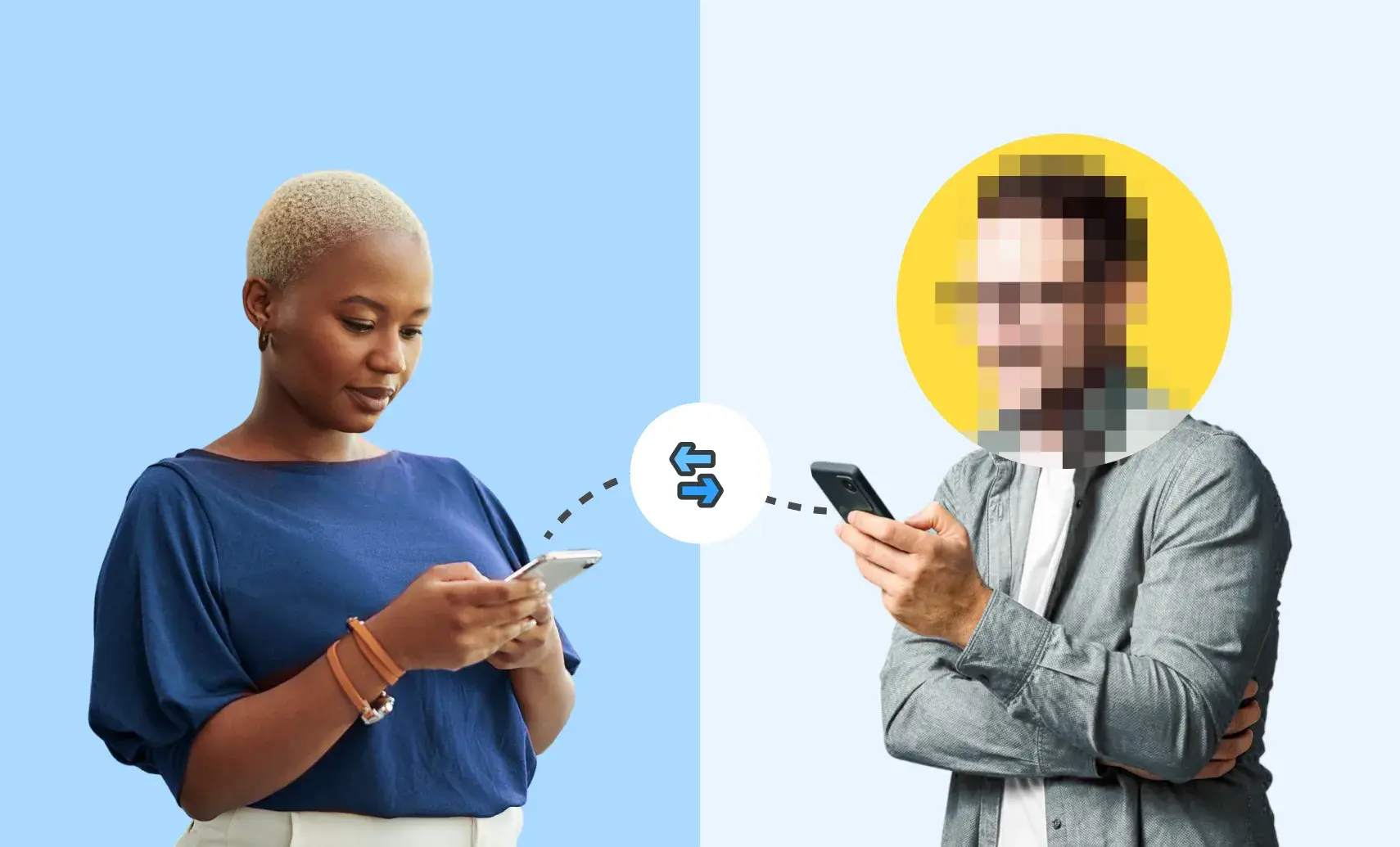

If you’re in doubt about who you’re speaking to, our free Digital ID app lets you swap verified details with another person, like your name and photo. This gives you confidence and reassurance that you’re talking to a real person – and to the correct person. After all, a scammer who is using a convincing deepfake video can’t share verified details about the person they are impersonating.

Swapping verified details with another person is a quick and simple way to be confident that the person you are messaging or speaking to is who they claim to be. If they’re genuine, they will appreciate this too, as it gives both of you peace of mind that you’re talking to a real person. This builds trust very quickly and is a simple yet effective way to protect yourself from deepfake scams.